Social Robot Development

In this project I built a social robot entirely from scratch. The work covered its mechanical and electronic design, its manufacturing, and the programming of both the initial firmware version and the high-level software used for a comprehensive demonstration of the robot’s core capabilities.

Introduction and Requirements

The evolution of robotics has had a significant impact on many industries, and social robots are becoming increasingly integral to fields such as healthcare, education, and personal assistance. These robots are characterized by their ability to understand and respond to human emotions and behaviors.

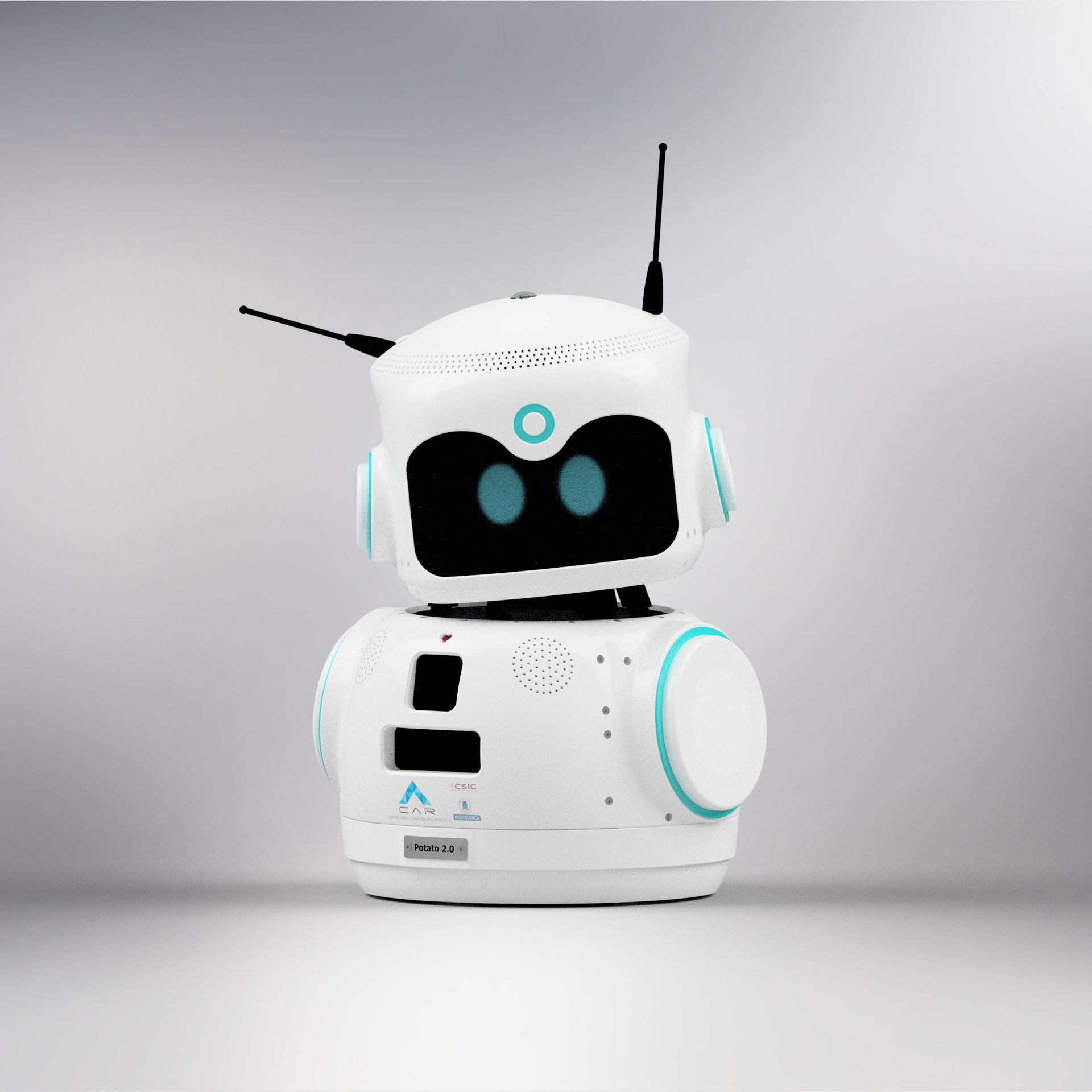

At the Center for Automation and Robotics (CAR), the Intelligent Control Group has made significant efforts to develop social robots for human-robot interaction and personal assistance. One example is Potato, a robot prototype that integrates a fuzzy logic-based emotional model with feedback from social psychology experts to achieve more natural and human-like interactions. Motivated by the successes and insights gained from the development of Potato and recognizing the growing demand for more advanced hardware, the next step was to design a new social robot.

This project focuses on the design, construction, and programming of a new advanced social robot tailored specifically for personal assistance applications. The robot is intended to serve as a supportive companion in scenarios such as elderly care and the management of young diabetic patients. Its sophisticated human-robot interaction capabilities, including its ability to express emotional responses, were designed to meet the social and psychological needs of these vulnerable populations and thereby improve their quality of life.

The project sought to address the limitations of existing models and commercial solutions, which often lack customization, flexibility, and adequate privacy protections. The design, which was recognized in a competitive design contest, combines advanced interaction capabilities, aesthetic appeal, and powerful onboard processing, so that the robot can engage effectively and naturally with users in diverse personal and social contexts.

Among the robot’s requirements, it had to be compact, stable and easy to maintain. It also had to be powered either from a mains plug or from a battery, and it had to allow enough airflow to properly cool the NVIDIA Jetson TX2 processing unit. Its design should be user-friendly, aesthetic, customizable and modularly expandable. Its cost had to be justified and optimized, and it had to offer a minimum set of functionalities, such as responding to being caressed, having at least one interactive moving part, or supporting bidirectional real-time communication.

Selected Tools

The project is broad in scope, covering mechanical and electronic design, material and commercial component selection, manufacturing, and the programming of both the low-level firmware and the high-level software application.

Design Tools

Autodesk Inventor was the main design tool, used to model the robot components and to generate the STL files for manufacturing them. AutoCAD was used to prepare some technical drawings required by the laser-cutting machine. Finally, the online tool Piskel was used to create the face animations corresponding to the different emotions.

Manufacturing Tools

The main tool used to manufacture the robot was a Prusa MK3 3D printer. A laser-cutting machine was also used to cut the polystyrene rings that diffuse the light of the LED rings.

Software Tools

The firmware was programmed in C++, while the software application was programmed in Python. The software was deployed with Docker, with each software module running in its own container; the ROS-like communication between containers is handled by a RabbitMQ broker running in a master module. Git was used for version control throughout the project.
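
The text does not specify how the ROS-like topics are mapped onto RabbitMQ. One common approach is a RabbitMQ topic exchange, where each message carries a dot-separated routing key and each consumer queue is bound with a pattern in which `*` matches exactly one word and `#` matches zero or more words. The following Python sketch, assuming topic exchanges are used, reimplements that matching rule so the routing semantics can be seen without a running broker; the topic names are hypothetical.

```python
from functools import lru_cache


def topic_matches(pattern: str, routing_key: str) -> bool:
    """Return True if an AMQP topic binding pattern matches a routing key.

    Words are separated by dots; '*' matches exactly one word and
    '#' matches zero or more words (RabbitMQ topic-exchange semantics).
    """
    p_words = pattern.split(".")
    k_words = routing_key.split(".")

    @lru_cache(maxsize=None)
    def match(i: int, j: int) -> bool:
        # i indexes the pattern words, j indexes the routing-key words.
        if i == len(p_words):
            return j == len(k_words)
        word = p_words[i]
        if word == "#":
            # '#' either consumes no word, or consumes one key word and stays active.
            return match(i + 1, j) or (j < len(k_words) and match(i, j + 1))
        if j == len(k_words):
            return False
        if word == "*" or word == k_words[j]:
            return match(i + 1, j + 1)
        return False

    return match(0, 0)


# Hypothetical topic names, for illustration only.
print(topic_matches("sensors.touch.*", "sensors.touch.forehead"))
print(topic_matches("sensors.#", "sensors.touch.left"))
print(topic_matches("sensors.*", "sensors.touch.left"))
```

In a real deployment the broker performs this matching itself; each containerized module would only declare the exchange and bind its queue with the patterns it cares about.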

Robot Design

The final design has a total of 6 DoF: a base capable of 360º rotation (1 DoF), an innovative 3-DoF spherical gear neck mechanism, and 1 DoF for each antenna (inspired by Reachy’s antennas). It has five capacitive touch sensors, located on both lateral panels and the forehead, as well as five LED rings. The front panel holds two speakers, a pulse LED, an RGB-D camera and a Leap Motion Controller for hand tracking. The main processing unit, which runs the vision, fuzzy emotional model and NLP tasks, is an NVIDIA Jetson TX2 connected to a 1 TB SSD and a Wi-Fi antenna. The robot uses a USB hub to connect its different components, and it also includes a wattmeter for estimating power consumption and an RTC module.
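
The text does not describe how the wattmeter readings are processed. As a hedged illustration of how a consumption estimate might be derived, the sketch below assumes periodic voltage and current samples, computes instantaneous power as P = V × I, and integrates it over time with the trapezoidal rule. The `Sample` type and the 12 V / 2 A values are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Sample:
    t_s: float        # timestamp in seconds
    voltage_v: float  # bus voltage in volts
    current_a: float  # current in amperes

    @property
    def power_w(self) -> float:
        # Instantaneous power P = V * I
        return self.voltage_v * self.current_a


def energy_wh(samples: list[Sample]) -> float:
    """Integrate power over time with the trapezoidal rule; returns watt-hours."""
    joules = 0.0
    for a, b in zip(samples, samples[1:]):
        dt = b.t_s - a.t_s
        joules += 0.5 * (a.power_w + b.power_w) * dt
    return joules / 3600.0  # 1 Wh = 3600 J


def average_power_w(samples: list[Sample]) -> float:
    """Average power over the sampled interval, in watts."""
    duration_s = samples[-1].t_s - samples[0].t_s
    return energy_wh(samples) * 3600.0 / duration_s


# Hypothetical readings: a constant 12 V, 2 A load sampled one hour apart.
samples = [Sample(0.0, 12.0, 2.0), Sample(3600.0, 12.0, 2.0)]
print(energy_wh(samples))  # 24.0
```

The trapezoidal rule is used because it handles unevenly spaced samples without extra bookkeeping; denser sampling simply improves the estimate.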

On the back body panel, removing a magnetically attached cover gives access to a ports panel with two USB-C ports, one Ethernet port, a protection fuse, and several switches and buttons. An Arduino Giga is used as the microcontroller that manages the robot’s actuators and sensors. The battery can be removed for recharging by taking off the right-side cover, which is also magnetically attached. On top of the head are an LDR ambient light sensor and a 4-microphone array for detecting sound from any direction.

Finally, the robot is easily expandable through two 6-pin ports, one on each side of the head, to which custom add-on modules can be connected. For this work, three modules were designed: a flashlight module (inspired by Disney Research’s robot), an air quality and hazardous gas detection module, and an NFC module. The following video shows an initial version of the design with an SPM (spherical parallel manipulator) neck mechanism, which was later replaced by the custom spherical gear mechanism:

Robot Manufacturing

The robot was mostly manufactured in PETG because of its higher mechanical and thermal resistance compared with PLA. Other materials include polystyrene for the light-diffusing LED rings, silicone for the touch sensors, anti-slip adhesive foam for the base, an elastic nylon mesh fabric covering the neck, and a PVC panel for the face. After 3D printing, the exterior panels were post-processed by applying putty, sanding, priming and painting. The Arduino is connected to the different components through a custom protoboard, which also connects it to the Dynamixel shield that powers the robot’s motors. Custom Dupont-type wires were made to connect the different hardware components.

System Architecture and Firmware Development

The system architecture consists of four separate layers, from bottom to top: the human-robot interface layer, the hardware layer, the firmware layer and the software layer. A custom firmware was developed in C++ for the robot using a hierarchical node structure and a serial port manager. The firmware lets the software application send commands to the robot’s actuators in either JSON or G-code format, and it is structured so that each node recursively checks whether a new command affects it and whether it needs to take any action. Recursion is also used for node configuration, error handling, activation and deactivation, so the set of nodes in use can easily be defined from a single file.
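
To make the recursive dispatch concrete, here is a minimal Python model of the pattern described above. It is only a sketch: the actual firmware is written in C++, and the node names, the nested spec format, the JSON command layout and the G-code axis letters (`GCODE_AXES`) are all hypothetical. Each node checks whether a command addresses it and then passes the command on to its children; activation and tree construction from a single nested spec are recursive in the same way.

```python
import json
from dataclasses import dataclass, field

# Hypothetical mapping from G-code axis letters to node names.
GCODE_AXES = {"R": "base", "A": "antenna_left", "B": "antenna_right"}


@dataclass
class Node:
    name: str
    children: list["Node"] = field(default_factory=list)
    active: bool = False
    state: dict = field(default_factory=dict)

    def activate(self) -> None:
        # Activation propagates recursively to every descendant.
        self.active = True
        for child in self.children:
            child.activate()

    def deactivate(self) -> None:
        self.active = False
        for child in self.children:
            child.deactivate()

    def handle(self, command: dict) -> list[str]:
        """Offer a command to this node and, recursively, to its children.

        Returns the names of the nodes that acted on it."""
        acted = []
        if self.active and self.name in command:
            # The command addresses this node: store the new setpoint.
            self.state["setpoint"] = command[self.name]
            acted.append(self.name)
        for child in self.children:
            acted.extend(child.handle(command))
        return acted


def build_tree(spec: dict) -> Node:
    """Recursively build the node tree from one nested spec (e.g. a JSON file)."""
    return Node(spec["name"], [build_tree(c) for c in spec.get("children", [])])


def parse_command(line: str) -> dict:
    """Turn a JSON object or a G-code-style line into {node_name: value}."""
    line = line.strip()
    if line.startswith("{"):
        return json.loads(line)
    command = {}
    for word in line.split()[1:]:  # skip the leading G/M code
        letter, value = word[0].upper(), float(word[1:])
        command[GCODE_AXES[letter]] = value
    return command


robot = build_tree({
    "name": "robot",
    "children": [
        {"name": "base"},
        {"name": "head", "children": [{"name": "antenna_left"},
                                      {"name": "antenna_right"}]},
    ],
})
robot.activate()
print(robot.handle(parse_command("G0 A30 B-30")))  # ['antenna_left', 'antenna_right']
print(robot.handle(parse_command('{"base": 90}')))  # ['base']
```

Because every operation walks the tree the same way, adding an actuator in this model only means adding an entry to the spec; the dispatch code itself does not change.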

Simulation Environment

A URDF model was built for the robot, and ROS 2 packages were then developed for the description, control and simulation of the robot in Gazebo Sim Harmonic. A simple demo based on a four-state emotional state machine was programmed as an example of a high-level application; the plan for the future is to extend it with LLM and vision modules for greater functionality, as well as with a fuzzy logic emotional model. The following video shows the Gazebo simulator GUI:
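
A four-state emotional state machine can be written as a transition table keyed by (current state, event). The source does not name the four emotions or the events that trigger transitions, so the states (`NEUTRAL`, `HAPPY`, `SAD`, `ANGRY`) and events (`caress`, `ignored`, `shaken`) in this Python sketch are hypothetical placeholders, not the demo’s actual logic.

```python
from enum import Enum


class Emotion(Enum):
    NEUTRAL = "neutral"
    HAPPY = "happy"
    SAD = "sad"
    ANGRY = "angry"


# Hypothetical transition table: (current state, event) -> next state.
TRANSITIONS = {
    (Emotion.NEUTRAL, "caress"): Emotion.HAPPY,
    (Emotion.NEUTRAL, "ignored"): Emotion.SAD,
    (Emotion.NEUTRAL, "shaken"): Emotion.ANGRY,
    (Emotion.HAPPY, "ignored"): Emotion.NEUTRAL,
    (Emotion.HAPPY, "shaken"): Emotion.ANGRY,
    (Emotion.SAD, "caress"): Emotion.NEUTRAL,
    (Emotion.ANGRY, "caress"): Emotion.NEUTRAL,
}


class EmotionStateMachine:
    def __init__(self, initial: Emotion = Emotion.NEUTRAL) -> None:
        self.state = initial

    def on_event(self, event: str) -> Emotion:
        # Events with no defined transition leave the state unchanged.
        self.state = TRANSITIONS.get((self.state, event), self.state)
        return self.state


fsm = EmotionStateMachine()
for event in ["caress", "ignored", "ignored", "caress"]:
    print(event, "->", fsm.on_event(event).value)
```

Each resulting state could then select the matching face animation. Keeping the transitions as data separates them from the code that reacts to them, which suits a demo meant to be replaced later by a richer emotional model.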

Final Result

The project successfully produced a modular and robust design with advanced interaction capabilities. The design also prioritized emotional engagement: animated emotional expressions were developed and integrated into the robot’s interaction system, demonstrating its potential to connect with users on a deeper level. The total cost of the robot was slightly below 3,500 €, though it could be further reduced in future versions through an appropriate procurement strategy. While there are areas for improvement, the results represent a significant contribution and offer numerous opportunities for continued research and development. The project’s success in meeting its objectives underscores its potential to advance the capabilities of social robots in real-world applications such as healthcare, entertainment and companionship, personal assistance, and education and child development.